Deep learning is a subfield of machine learning that focuses on the development and application of artificial neural networks. It has gained significant attention in recent years due to its ability to solve complex problems and achieve state-of-the-art performance in various domains, including computer vision, natural language processing, and speech recognition. In this article, we will demystify deep learning, exploring its concepts, architectures, and applications.


1. Introduction

Deep learning trains artificial neural networks, which are loosely inspired by the structure and function of the human brain, on large amounts of data. From that data the networks learn complex patterns, which they then use to make predictions or decisions on new inputs.

2. What is Deep Learning?

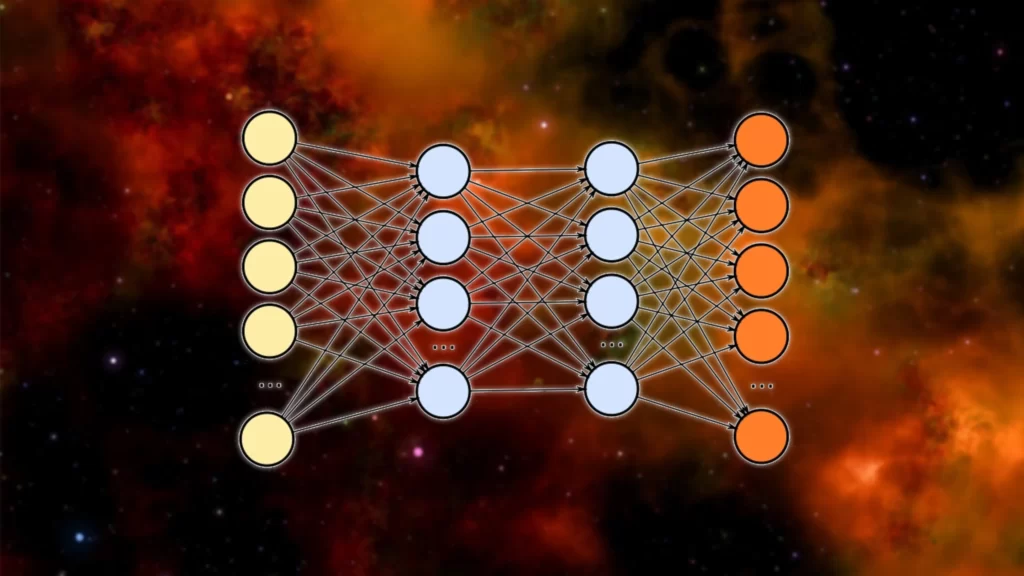

Deep learning refers to the training and application of deep artificial neural networks. These networks are composed of multiple layers of interconnected artificial neurons, enabling them to learn hierarchical representations of data. Deep learning models excel at learning intricate patterns and can automatically extract useful features from raw data.


3. Artificial Neural Networks

Artificial neural networks form the foundation of deep learning. Here are some commonly used types of neural networks in deep learning:

3.1 Feedforward Neural Networks

Feedforward neural networks are the simplest form of neural networks. They consist of an input layer, one or more hidden layers, and an output layer. Information flows from the input layer through the hidden layers to the output layer without any feedback connections.
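The one-way flow of information can be illustrated with a forward pass written in plain Python. This is only a minimal sketch: the layer sizes, the hand-picked weights, and the helper names `dense` and `forward` are assumptions made for illustration, not part of any particular library.

```python
def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W x + b), using plain lists."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(x, layers):
    """Pass x through each (weights, biases, activation) layer in order.
    Nothing is ever fed back to an earlier layer."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

# Hypothetical network: 2 inputs -> 2 hidden units (ReLU) -> 1 linear output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0], relu),
    ([[1.0, 2.0]], [0.5], lambda z: z),
]
output = forward([2.0, 1.0], layers)
print(output)
```

In a real model the weights would be learned during training rather than written by hand.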

3.2 Convolutional Neural Networks

Convolutional neural networks (CNNs) are designed for analyzing visual data, such as images and videos. They employ convolutional layers that capture spatial patterns by convolving filters over the input data. CNNs are highly effective in tasks like image classification, object detection, and image segmentation.
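To make "convolving filters over the input" concrete, here is a small sketch of a single filter sliding over a single-channel image. It assumes no padding and a stride of 1, and it computes cross-correlation, which is the operation deep learning libraries usually call convolution. The image and filter values are made up for illustration.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Hypothetical 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked filter that responds where intensity increases from left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)
```

The feature map is non-zero only where the filter overlaps the edge, which is the sense in which a filter captures a spatial pattern. In a CNN the filter values are learned instead of chosen by hand.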

3.3 Recurrent Neural Networks

Recurrent neural networks (RNNs) are designed to handle sequential data, such as text or time series data. They use recurrent connections that allow information to flow in cycles, enabling the network to capture dependencies and context over time. RNNs are commonly used in tasks like language modeling, machine translation, and speech recognition.
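The recurrent connection can be sketched as a hidden state that is fed back into the network at every time step. The example below assumes the simplest possible case, a vanilla RNN with a single scalar hidden unit and hand-picked weights; the names `rnn_step` and `run_rnn` are illustrative.

```python
import math

def rnn_step(x, h_prev, w_xh, w_hh, b):
    """One time step: h_t = tanh(w_xh * x_t + w_hh * h_prev + b)."""
    return math.tanh(w_xh * x + w_hh * h_prev + b)

def run_rnn(sequence, w_xh=0.5, w_hh=0.8, b=0.0):
    """Process a sequence one element at a time, carrying the hidden state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b)
        states.append(h)
    return states

# Only the first input is non-zero, yet later hidden states are still non-zero:
# the state carries information about earlier inputs forward in time.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```

The same weights are reused at every step, which is what lets an RNN handle sequences of any length.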

3.4 Generative Adversarial Networks

Generative adversarial networks (GANs) consist of two neural networks: a generator network and a discriminator network. The generator network generates synthetic data, while the discriminator network tries to distinguish between real and synthetic data. GANs are used for tasks like image generation, data synthesis, and style transfer.
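The competition between the two networks shows up in their loss functions. The sketch below assumes the discriminator outputs a probability that a sample is real, and it uses the commonly used non-saturating generator loss, which is one formulation among several. The function names are illustrative.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for one real and one generated sample.
    d_real: discriminator's probability that the real sample is real.
    d_fake: discriminator's probability that the generated sample is real."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return -math.log(d_fake)

# A discriminator that separates real from fake well has a low loss...
print(discriminator_loss(d_real=0.9, d_fake=0.1))
# ...while the generator's loss is high in that same situation.
print(generator_loss(d_fake=0.1))
```

Training alternates between lowering the discriminator's loss and lowering the generator's loss, so improvement in one network puts pressure on the other.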

4. Training Deep Neural Networks

Training deep neural networks involves the following key components:

4.1 Backpropagation

Backpropagation is a crucial algorithm for training deep neural networks. It applies the chain rule backward through the layers to calculate the gradient of the loss function with respect to each of the network's parameters. An optimizer such as gradient descent then uses these gradients to update the parameters. In this way the network learns from the discrepancies between its predictions and the ground truth.
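A worked example on a very small network may help. The sketch below assumes a network with one input, one tanh hidden unit, and one linear output, trained with a squared-error loss; the weights, learning rate, and function names are chosen for illustration only.

```python
import math

def forward(x, w1, w2):
    h = math.tanh(w1 * x)   # hidden activation
    y = w2 * h              # linear output
    return h, y

def loss_and_grads(x, target, w1, w2):
    """Squared-error loss and its gradients, computed with the chain rule."""
    h, y = forward(x, w1, w2)
    loss = 0.5 * (y - target) ** 2
    dy = y - target                 # dL/dy
    dw2 = dy * h                    # dL/dw2 = dL/dy * dy/dw2
    dh = dy * w2                    # dL/dh  = dL/dy * dy/dh
    dw1 = dh * (1.0 - h * h) * x    # dL/dw1, using tanh'(z) = 1 - tanh(z)^2
    return loss, dw1, dw2

def train(x, target, w1, w2, lr=0.1, steps=200):
    """Plain gradient descent: step each weight against its gradient."""
    for _ in range(steps):
        _, dw1, dw2 = loss_and_grads(x, target, w1, w2)
        w1 -= lr * dw1
        w2 -= lr * dw2
    return w1, w2

x, target = 1.0, 0.5
initial_loss, _, _ = loss_and_grads(x, target, 0.3, 0.2)
w1, w2 = train(x, target, 0.3, 0.2)
final_loss, _, _ = loss_and_grads(x, target, w1, w2)
print(initial_loss, final_loss)
```

Deep learning frameworks automate the gradient computation, but the principle is the same: gradients flow from the loss back toward the earliest layers.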

4.2 Activation Functions

Activation functions introduce non-linearities to neural networks, enabling them to model complex relationships in data. Common activation functions include the sigmoid, tanh, and ReLU (Rectified Linear Unit) functions. Choosing appropriate activation functions is essential for effective training and network performance.
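The three functions named above are short enough to write out directly. This sketch uses only Python's standard `math` module and prints each function's value at a few sample inputs.

```python
import math

def sigmoid(z):
    """Squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Squashes any real number into the range (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Passes positive values through unchanged and clips negatives to zero."""
    return max(0.0, z)

for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}  relu={relu(z):.1f}")
```

Without a non-linear function like these between layers, a stack of layers would collapse into a single linear transformation.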

4.3 Optimization Algorithms

Optimization algorithms, such as stochastic gradient descent (SGD) and adaptive methods like Adam and RMSprop, determine how the network's parameters are updated during training. These algorithms search for parameter values that make the loss function as small as possible. In practice they find good values rather than a guaranteed optimum.
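The difference between update rules is easiest to see on a toy problem. The sketch below assumes a one-parameter loss, (w - 3)^2, and compares a plain gradient descent update with the Adam update using its usual default decay rates. The learning rates and step counts are arbitrary choices for illustration, and because this toy loss has no randomness, the "stochastic" part of SGD is not represented.

```python
import math

def grad(w):
    """Gradient of the toy loss (w - 3)^2, whose minimum is at w = 3."""
    return 2.0 * (w - 3.0)

def sgd(w, lr=0.1, steps=100):
    """Gradient descent: move against the gradient by a fixed learning rate."""
    for _ in range(steps):
        w -= lr * grad(w)
    return w

def adam(w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Adam: scale each step using running averages of the gradient and its square."""
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1.0 - beta1) * g        # running mean of gradients
        v = beta2 * v + (1.0 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1.0 - beta1 ** t)           # bias correction for early steps
        v_hat = v / (1.0 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

w_sgd = sgd(0.0)
w_adam = adam(0.0)
print(w_sgd, w_adam)
```

Both runs start at w = 0 and should end close to the minimum at w = 3, by different routes.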

4.4 Regularization Techniques

Regularization techniques, such as dropout and weight decay, help prevent overfitting in deep neural networks. Overfitting occurs when the model performs well on the training data but fails to generalize to new data. Regularization techniques introduce constraints that encourage the network to learn more robust and generalizable representations.
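Both techniques named above fit in a few lines. This sketch assumes "inverted" dropout, where surviving activations are rescaled during training, and weight decay applied as an extra term in a plain SGD update. The function names and constants are illustrative.

```python
import random

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: during training, zero each unit with probability `rate`
    and scale the survivors by 1 / (1 - rate) so the expected value is unchanged.
    At inference time the activations pass through untouched."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def sgd_step_with_weight_decay(weights, grads, lr=0.1, weight_decay=0.01):
    """SGD update with an added term that shrinks every weight toward zero."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
dropped = dropout([1.0, 2.0, 3.0, 4.0], rate=0.5, rng=rng)
decayed = sgd_step_with_weight_decay([1.0, -2.0], grads=[0.0, 0.0])
print(dropped)
print(decayed)
```

Even with zero gradients, the weight decay step moves each weight slightly toward zero, which discourages the large weights that often go along with overfitting.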


5. Applications of Deep Learning

Deep learning has found applications in various domains. Here are some notable examples:

5.1 Computer Vision

Deep learning has revolutionized computer vision tasks, such as image classification, object detection, and image segmentation. Models like convolutional neural networks (CNNs) have achieved remarkable accuracy in tasks that were previously challenging for traditional computer vision algorithms.

5.2 Natural Language Processing

Deep learning models, particularly recurrent neural networks (RNNs) and transformer-based architectures, have made significant advancements in natural language processing tasks, including machine translation, sentiment analysis, and language generation.

5.3 Speech Recognition

Deep learning has played a pivotal role in improving speech recognition systems. Recurrent neural networks (RNNs) and convolutional neural networks (CNNs) have made speech recognition more accurate, which in turn supports applications like voice assistants and transcription services.

5.4 Healthcare

Deep learning has shown promise in healthcare applications, such as medical image analysis, disease diagnosis, and personalized medicine. It helps analyze medical images, detect abnormalities, and predict disease outcomes.

5.5 Autonomous Vehicles

Deep learning is a critical component of autonomous vehicle technologies. It enables tasks like object detection, pedestrian recognition, and lane detection, facilitating safer and more efficient self-driving vehicles.


6. Advantages and Challenges of Deep Learning

6.1 Advantages

Deep learning offers several advantages:

- High Accuracy: Deep learning models have achieved state-of-the-art performance in tasks such as image classification and speech recognition, often surpassing traditional machine learning algorithms when enough training data is available.

- Feature Learning: Deep learning models can automatically learn relevant features from raw data, reducing the need for manual feature engineering.

- End-to-End Learning: Deep learning models can learn directly from raw data, allowing for end-to-end learning pipelines without the need for intermediate steps.

- Scalability: Deep learning models can scale to large datasets and complex problems, making them suitable for big data applications.

6.2 Challenges

Deep learning also presents challenges:

- Data Requirements: Deep learning models typically require large amounts of labeled data for training, which can be costly and time-consuming to obtain.

- Computational Resources: Training deep neural networks can be computationally intensive, requiring powerful hardware and significant processing time.

- Interpretability: Deep learning models can be seen as black boxes, making it challenging to interpret and explain their decisions or predictions.

- Overfitting: Deep neural networks are prone to overfitting, necessitating regularization techniques and careful model selection.

- Hyperparameter Tuning: Choosing appropriate hyperparameters for deep learning models requires expertise and careful experimentation.

7. The Future of Deep Learning

The future of deep learning is exciting, with ongoing advancements and research. The field is continuously exploring new architectures, optimization algorithms, and regularization techniques. Combining deep learning with other learning paradigms, such as reinforcement learning and unsupervised learning, holds potential for groundbreaking applications.

8. Conclusion

Deep learning is a powerful subset of machine learning that leverages artificial neural networks to learn complex patterns and make accurate predictions or decisions. With its remarkable achievements in computer vision, natural language processing, and speech recognition, deep learning has transformed various industries. While it offers advantages such as high accuracy and automated feature learning, challenges related to data requirements, computational resources, and interpretability persist. As research progresses, deep learning will continue to drive innovation and shape the future of AI.


9. FAQs

Q1: What is deep learning?

Deep learning is a subfield of machine learning that focuses on training artificial neural networks with multiple layers to learn hierarchical representations of data and make accurate predictions or decisions.

Q2: What are the types of neural networks used in deep learning?

Common types of neural networks used in deep learning include feedforward neural networks, convolutional neural networks (CNNs), recurrent neural networks (RNNs), and generative adversarial networks (GANs).

Q3: What are some applications of deep learning?

Deep learning has applications in computer vision, natural language processing, speech recognition, healthcare, autonomous vehicles, and more.

Q4: What are the advantages of deep learning?

Advantages of deep learning include high accuracy, automated feature learning, end-to-end learning, and scalability to complex problems and large datasets.

Q5: What are the challenges of deep learning?

Challenges of deep learning include data requirements, computational resources, interpretability, overfitting, and hyperparameter tuning.